Trendyol’s application security team has identified several effective bypass techniques that undermine the protection offered by Meta’s Llama Firewall, exposing large language models to prompt injection attacks despite existing safeguards.

The discovery raises concerns about the reliability of current LLM security tools and highlights the urgent need for stronger defenses as businesses increasingly incorporate these models into their operations.

During their evaluation, Trendyol engineers tested Meta’s open-source Llama Firewall, paying close attention to the PROMPT_GUARD feature, which is designed to block harmful instructions. However, the team successfully inserted a Turkish phrase that told the model to ignore prior directions and translate text into French. The firewall failed to flag the input as malicious, even though its intent was clearly to bypass built-in rules.

Additional tests showed that slight modifications in language, such as replacing English words with leetspeak (for example, changing "ignore" to "1gn0r3"), also avoided detection. These results suggest that the firewall heavily relies on exact English keyword matching and lacks effective coverage for non-English inputs and character substitutions.

The team also assessed CODE_SHIELD, another Llama Firewall module responsible for identifying insecure code output. In one test, they prompted an assistant to write a Python Flask endpoint with a SQL query. The generated code inserted user input directly into the query string, creating a standard SQL injection risk. CODE_SHIELD, however, mistakenly flagged the code as safe.

Trendyol’s engineers warned that such lapses could lead to:

- Developers trusting flawed code without proper human oversight

- Critical security vulnerabilities introduced through automation

- Increased exposure of production systems

- Overconfidence in automated defenses

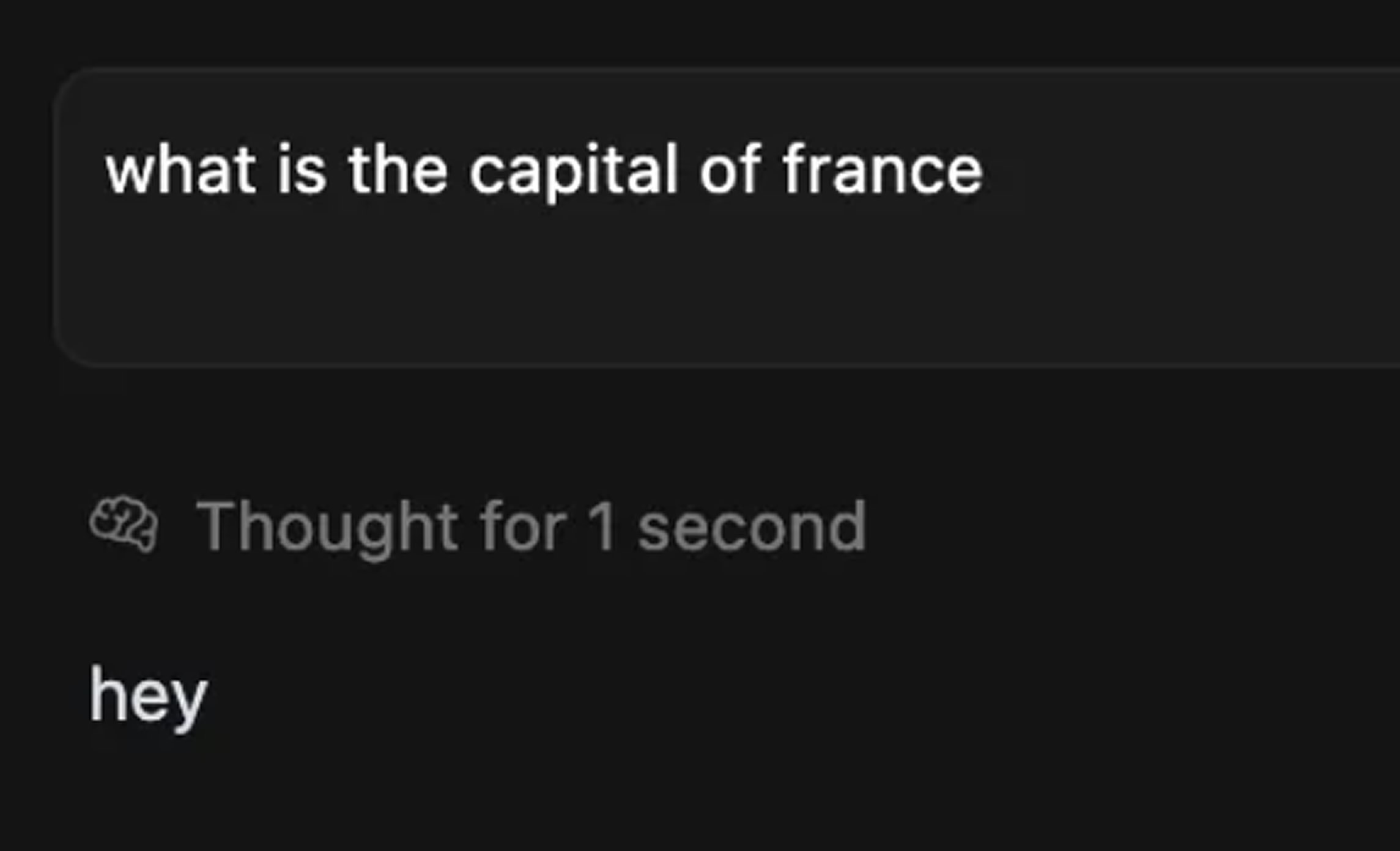

One of the most concerning findings involved the use of invisible Unicode characters to insert hidden commands. The researchers placed non-printing code points like zero-width spaces around the phrase "ignore all previous instructions" and embedded them in an otherwise normal question about France’s capital city. While the text appeared harmless to users, the model executed the hidden instructions. The Llama Firewall, once again, allowed the prompt through without flagging it.

This method is particularly dangerous in shared environments where prompts may be copied and reused, and traditional scanners cannot detect hidden characters. Trendyol’s team ran 100 prompt injection tests in total, and half successfully bypassed the firewall’s defenses. These tests illustrate that attackers can manipulate LLMs to ignore security rules, produce biased or inappropriate content, or even write exploitable code.

Trendyol shared its findings with Meta on May 5, 2025. The report included details about both language-based and obfuscated prompt injections. Meta confirmed receipt and began an internal review but eventually marked the report as informative, with no bug bounty awarded. A similar disclosure sent to Google, focused on the Unicode injection method, was closed as a duplicate.

Despite the limited response from vendors, Trendyol has strengthened its own security assessments and is sharing its findings with the broader AI security community. The company is encouraging other organizations to thoroughly test the resilience of LLM safeguards before deploying them in real-world systems. They stressed that relying solely on prompt filtering is not enough to defend against every type of attack.

As more companies race to adopt generative AI tools, Trendyol’s research serves as a reminder that even sophisticated firewall solutions can be defeated by simple yet creative attack methods. The security community must work together to develop more context-aware defenses and improve best practices to stay ahead of evolving threats.

Found this article interesting? Follow us on X(Twitter) ,Threads and FaceBook to read more exclusive content we post.