Meta Expands Teen Safety Features Amid Ongoing Criticism Over Online Harm Protection

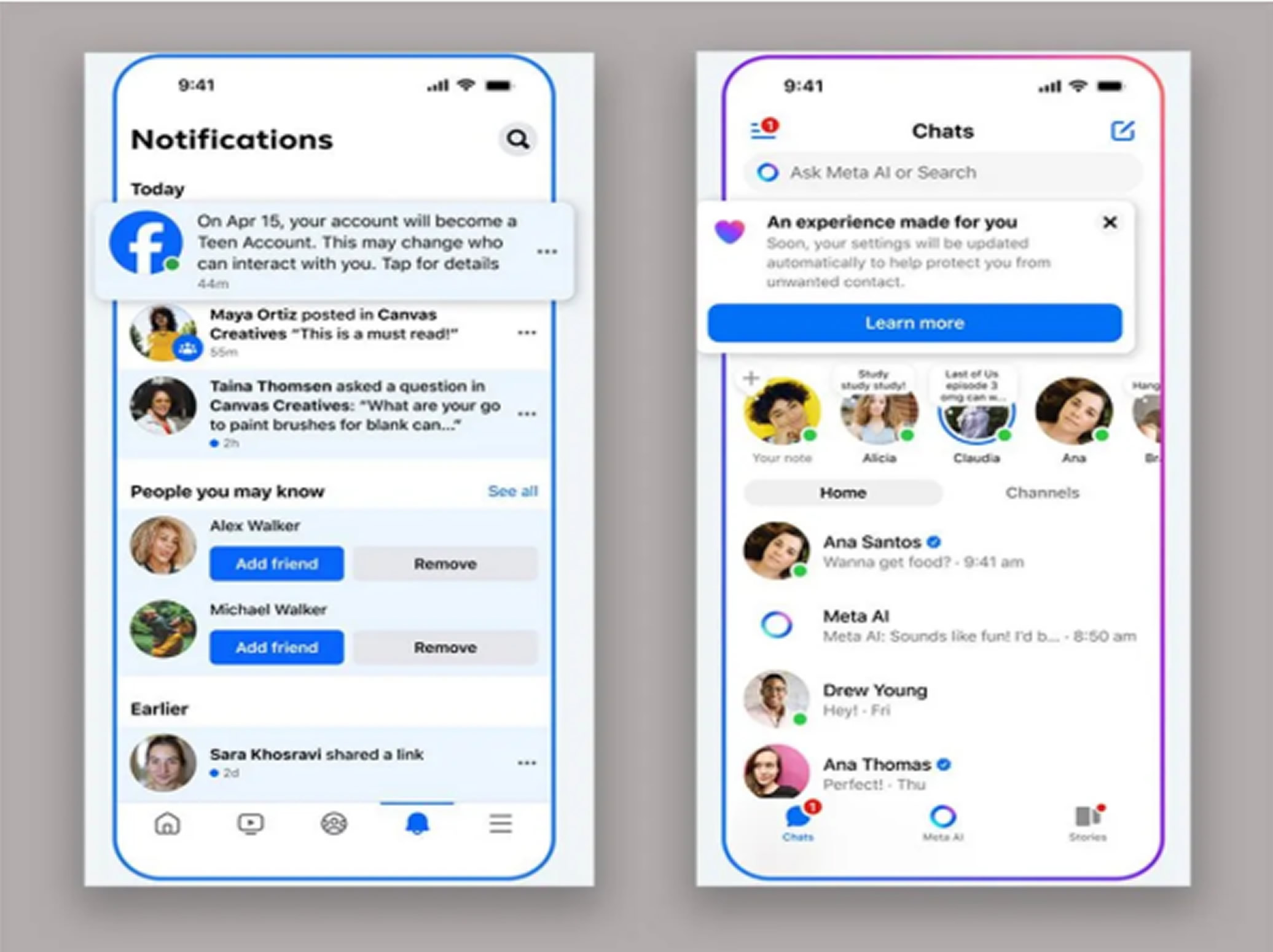

Meta Platforms (META.O) is rolling out its “Teen Accounts” feature to Facebook and Messenger this Tuesday, as the company faces growing criticism that it is not doing enough to protect young users from online harms. The privacy and parental control measures, first introduced on Instagram last year, aim to address rising concerns over how teenagers use social media.

The expansion comes as Meta responds to ongoing scrutiny and legal pressure. Some lawmakers are pressing ahead with legislation such as the Kids Online Safety Act (KOSA) to protect children from online dangers. Meta, along with competitors such as ByteDance’s TikTok and Google’s YouTube, faces multiple lawsuits filed by children, parents, and school districts claiming that social media platforms are addictive and harmful to young users. In 2023, 33 U.S. states, including California and New York, sued Meta, accusing the company of misleading the public about the dangers of its platforms.

New Features and Parental Controls

Under the latest update, teens under the age of 16 will need parental permission before they can go live on Facebook or Messenger. They will also need parental permission to turn off the feature that automatically blurs images potentially containing nudity in direct messages. These updates will roll out gradually over the next few months, according to Meta’s announcement.

Legislative Context

Meta’s move to expand its teen safety measures comes as U.S. lawmakers continue to push for stronger online protections for children. In July 2024, the U.S. Senate advanced two bills, the Kids Online Safety Act (KOSA) and the Children and Teens’ Online Privacy Protection Act, both of which would hold social media platforms accountable for their effects on children and teens. The Republican-led House declined to bring KOSA to a vote last year, but it has since signaled renewed efforts to pass laws aimed at safeguarding kids from online dangers.

Social media platforms such as Facebook, Instagram, and TikTok allow users as young as 13 to sign up, raising concerns over how these platforms manage the safety of younger audiences.
