Nation-state threat actors are leveraging generative AI tools to refine their attack strategies, though they have not yet used them to create entirely new attack methods, according to insights shared at this week’s RSAC Conference.

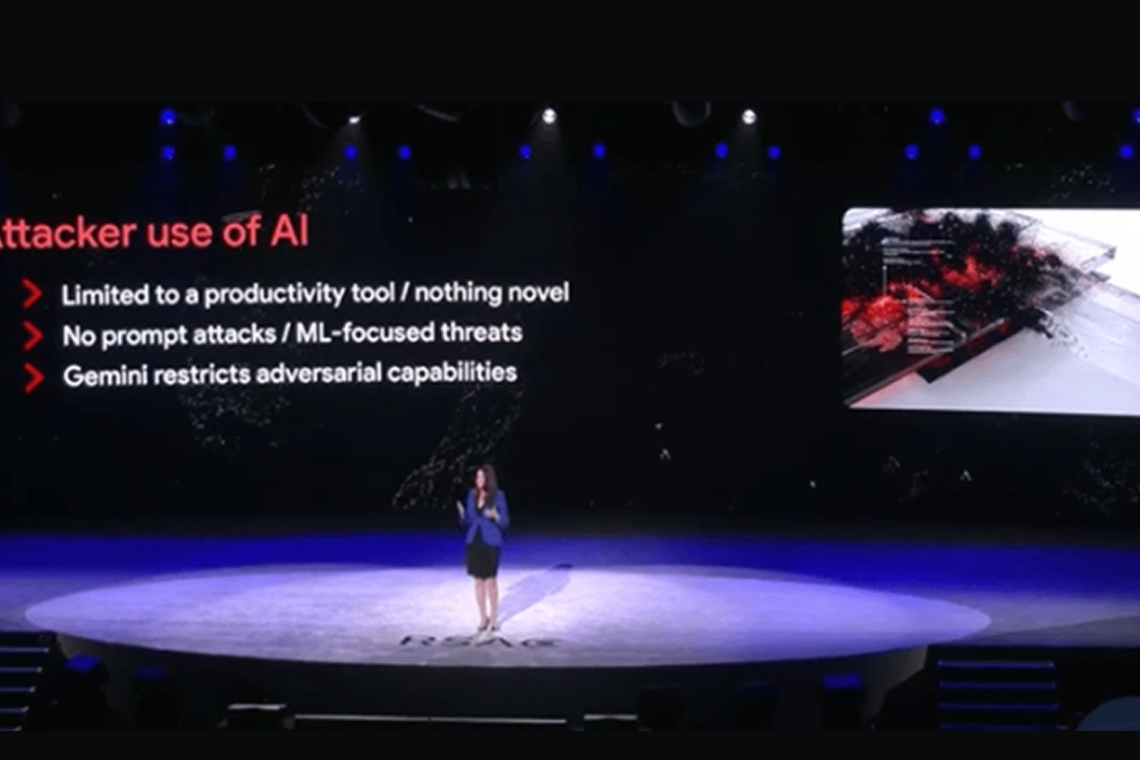

Sandra Joyce, VP of Google Threat Intelligence, explained that while AI is a valuable tool for routine tasks, there have been no signs of adversaries developing novel attack vectors with these models. "Attackers are using GenAI as many of us do, as a productivity tool — for brainstorming, refining their work, and so on," she said during the RSAC 2025 Conference.

The role of AI in cybersecurity was a central theme across more than 100 sessions at the conference, which rebranded from RSA to RSAC this year, having become independent of its security-vendor origins in 2022.

Iran, China, and North Korea are the leading nations using generative AI in their cyber operations. Joyce noted that APT groups from over 20 countries have accessed Google’s public Gemini GenAI services, with Iranian threat actors being the heaviest users. Activity from China and North Korea-linked groups was also significant.

Despite Google’s safeguards against malicious use, threat actors continue to use Gemini in four main phases of an attack: reconnaissance, vulnerability research, malicious scripting, and evasion. However, Joyce emphasized that these are existing attack techniques being made more efficient, not entirely new AI-driven attacks.

Joyce also shared examples of how nation-state threat groups are utilizing GenAI tools. Iranian APT groups have used Gemini to research defense systems, including unmanned aerial vehicles, anti-drone systems, and missile defense technology. North Korean APT groups have focused their efforts on researching nuclear technology and South Korean power plants, including security details.

Threat groups are also turning to GenAI for assistance in malware development. For example, a North Korean APT group used the tool for sandbox evasion and detection of virtual machine environments. Additionally, GenAI is being used to help create phishing campaigns, including localization efforts such as translating to specific colloquial English and developing more convincing personas.

On the defensive side, Joyce highlighted the potential of GenAI to enhance security efforts, such as vulnerability detection, incident workflows, malware analysis, and fuzzing.

At the conference, Cisco's Jeetu Patel introduced the Foundation AI security model, an open-source large language model (LLM) designed for security alerting and workflows. The model is available on Hugging Face, and a multi-step reasoning version will be released soon.
