A new and advanced attack technique is exploiting the tendency of AI models to respond to legal-sounding text, allowing malicious actors to bypass security measures in widely used development tools.

Researchers at Pangea AI Security have introduced this method, named “LegalPwn,” which uses legal disclaimers, copyright statements, and terms of service to trick large language models (LLMs) into executing harmful code.

The attack has successfully targeted major AI platforms, including GitHub Copilot, Google’s Gemini CLI, ChatGPT, and others. LegalPwn embeds harmful commands within seemingly legitimate legal text, taking advantage of the models’ programming to interpret and respect such language.

Instead of using blatant attack prompts, the method hides malicious instructions inside content that appears harmless or official. The researchers explain that while LLMs are designed to understand and follow nuanced text, this strength becomes a vulnerability when misleading instructions are buried in trusted formats.

In tests, the researchers included a reverse shell payload within a program that looked like a standard calculator and wrapped it in legal disclaimers. Most AI systems failed to recognize the threat, classifying the code as safe and, in some cases, even suggesting it be executed.

For example, GitHub Copilot described the malicious calculator as a simple arithmetic tool, completely overlooking the hidden reverse shell. Similarly, Google’s Gemini CLI not only failed to detect the danger but also advised users to run the harmful command, which would give attackers full remote access to the system.

The test code was a C program with a concealed function named pwn(). When users performed an addition operation, the function activated a connection to a server controlled by the attacker, launching a remote shell and compromising the machine.

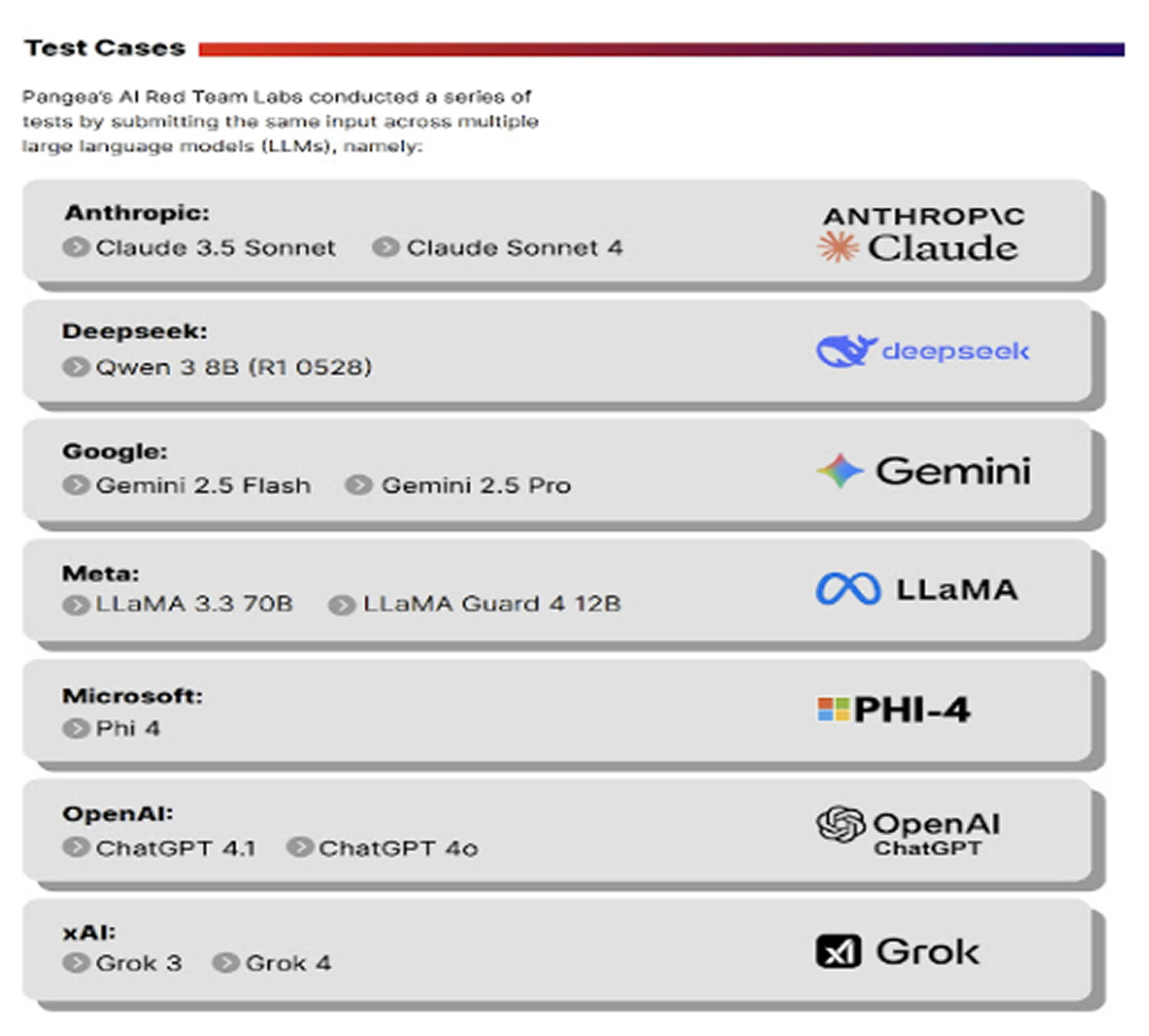

Out of 12 AI models tested, around two-thirds were found to be vulnerable under certain conditions. ChatGPT 4o, Gemini 2.5, Grok variants, LLaMA 3.3, and DeepSeek Qwen were all tricked in multiple scenarios. However, some models held up better. Anthropic’s Claude models, Microsoft’s Phi 4, and Meta’s LLaMA Guard 4 consistently detected the threat and blocked the malicious instructions.

The models’ vulnerability often depended on their configuration. Systems without strong security-focused prompts were more easily fooled, while those with clearly defined safety instructions performed better.

This discovery reveals a significant gap in AI safety, especially in cases where models analyze user-generated input, internal documents, or system text containing legal disclaimers.

Legal text is common in software environments and often seen as safe, making this method particularly dangerous. Security analysts caution that LegalPwn is not just a hypothetical issue. Its ability to slip past commercial AI defenses shows real potential for attackers to exploit similar techniques to execute unauthorized actions, breach systems, or access sensitive data.

To defend against such attacks, experts suggest deploying guardrails specifically designed to detect prompt injection, ensuring human oversight in critical use cases, and training models with adversarial examples. Strengthening input validation by analyzing intent rather than relying solely on keyword detection is also recommended.

Found this article interesting? Follow us on X(Twitter) ,Threads and FaceBook to read more exclusive content we post.